Quality assurance and testing is something that we've had a heavy focus on over the past eighteen months. We introduced Jenkins into our workflow to automatically test our extensions over a matrix of PHP and Magento versions and, overnight, a weight was lifted off our minds.

For one reason or another though, Jenkins hasn't fitted particularly well into our experience with the majority of our client projects.

After hearing a couple of developers sing Bamboo's praises we decided to give it a go on our latest project, and it's great.

Objectives

Our objectives for a CI/CD process are the following:

- Feedback to developers: failing tests, code standards violations, etc

- Provide an audit trail for deployments

For testing we run phpunit and behat. For code standards we run phpmd and phpcs with Magento ECG sniffs and extracts from the Judge Project.

For deployments, and visibility in that respect, we used to use Deploy but as part of this project we moved to rocketeer under Bamboo.

Automating your build

Before you even start going down the automated build route, it's highly advisable to have a defined build process using some kind of build file. The alternative to this is shoestringing these commands together into your CI steps and losing crucial visibility. The advantage to the build file route is that you keep your tasks (such as installing composer dependencies, provisioning virtual machines, even the running of the unit tests) under version control and make the process auditable.

For our build process we use phing, a build tool written in PHP that's remarkably similar to ant. It's a bit long, but you're more than welcome to read a variation of the one we use internally.

We've already identified a swathe of changes that we'd like to introduce, so you can expect changes to that in the near future. Specifically, we want to be better at handling the "in vagrant" / "out of vagrant" commands, either by specifying that this file should always be run inside or outside of the machine, or by having the commands use the $USER == 'vagrant' check to decide whether or not to prepend the command with vagrant ssh -c.
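As a rough illustration of the $USER check, here is a minimal shell sketch. The function name build_command is hypothetical and not part of phing or vagrant, and it assumes the command contains no single quotes. It only prints the command line that would be run, so it is safe to try anywhere.

```shell
# build_command: hypothetical helper, not part of phing or vagrant.
# $1 = current user (normally "$USER"), $2 = command to run in the machine.
# Assumes the command itself contains no single quotes.
build_command() {
    if [ "$1" = "vagrant" ]; then
        # Already inside the machine: run the command directly.
        printf '%s\n' "$2"
    else
        # On the host: hand the command to the machine over SSH.
        printf "vagrant ssh -c '%s'\n" "$2"
    fi
}

# Usage: build_command "$USER" "php -v"
```

The same decision could live in the build file as a property, which would keep every target free of its own inside/outside logic.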

Build machine

A build machine is where the actual build will be happening; the machine that will perform the git checkout, run phpunit and all of your other tasks.

One of the main problems with our Jenkins setup is that we had allowed ourselves to become lazy with the build machines. Our machine maintenance consisted of logging into the machine, installing patches and tools as we needed them. This has left us with a mess of a machine that we're afraid to decommission.

The beauty of Bamboo, the "On Demand" version at least, is that it encourages the use of Amazon EC2 (spot instances -- running a machine for $0.01/hour anyone?) to run your builds, and provides a standard set of AMIs to use. So, we used one of their images and went old school, adding the following to the "Instance startup script" field:

    export DEBIAN_FRONTEND=noninteractive

    apt-get update
    apt-get -q -y dist-upgrade
    apt-get install -q -y apache2 \
        php5 \
        php5-mysqlnd \
        php5-mcrypt \
        php5-intl \
        php5-curl \
        php5-gd \
        php5-json \
        libapache2-mod-php5 \
        mysql-client-5.5 \
        mysql-server-5.5 \
        libmysqlclient-dev \
        language-pack-en

    rm -f /var/www/html/index.html

    mysql -uroot -e "CREATE DATABASE magento"
    mysql -uroot -e "CREATE DATABASE magento_test"
    mysql -uroot -e "CREATE USER 'magento'@'localhost' IDENTIFIED BY 'magento'"
    mysql -uroot -e "GRANT ALL ON magento.* TO magento@'%'"
    mysql -uroot -e "GRANT ALL ON magento_test.* TO magento@'%'"
    mysql -uroot -e "FLUSH PRIVILEGES"

    cp /etc/php5/conf.d/mcrypt.ini /etc/php5/apache2/conf.d/
    cp /etc/php5/conf.d/mcrypt.ini /etc/php5/cli/conf.d/

    wget http://www.phing.info/get/phing-latest.phar -O /usr/local/bin/phing.phar
    chmod +rx /usr/local/bin/phing.phar

    wget https://raw.githubusercontent.com/colinmollenhour/modman/df531dd29f10e9b0611763eb6d96a8417eef43d2/modman -O /usr/local/bin/modman
    chmod +rx /usr/local/bin/modman

    wget https://github.com/netz98/n98-magerun/raw/2f48cd476c5bb42a95ae40ebf74e27614fcb14d0/n98-magerun.phar -O /usr/local/bin/n98-magerun.phar
    chmod +rx /usr/local/bin/n98-magerun.phar

    wget https://phar.phpunit.de/phpunit.phar -O /usr/local/bin/phpunit.phar
    chmod +rx /usr/local/bin/phpunit.phar

    curl -sS https://getcomposer.org/installer | php -- --install-dir=/usr/local/bin/
    chmod +rx /usr/local/bin/composer.phar

    ec2-associate-address -i `curl http://169.254.169.254/latest/meta-data/instance-id` -O ANACCESSKEY -W 'ASECRETKEY' an.ip.add.ress

This sets up our environment with all of Magento's dependencies, plus a couple of tools that we like to use. Finally, it assigns one of our elastic IP addresses to the instance; that address is whitelisted for SSH access on our staging and production machines.

Configuring build steps

A build process should fail fast, getting feedback to the developers as soon as possible. This means the process should be front-loaded with the quicker tasks, such as offline unit tests, followed by database-dependent tests and then the much slower functional tests.
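The fail-fast ordering can be sketched in plain shell. This is only an illustration of the idea, not how Bamboo runs its stages; run_stages and the phing target names in the usage comment are hypothetical.

```shell
# run_stages: run each command in order and stop at the first failure,
# so slower stages never start once a quicker one has failed.
run_stages() {
    for stage in "$@"; do
        echo "Running: $stage"
        if ! sh -c "$stage"; then
            echo "Failed: $stage" >&2
            return 1
        fi
    done
    echo "All stages passed"
}

# Usage, quickest first (target names are hypothetical):
# run_stages "phing test:unit" "phing test:database" "phing test:functional"
```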

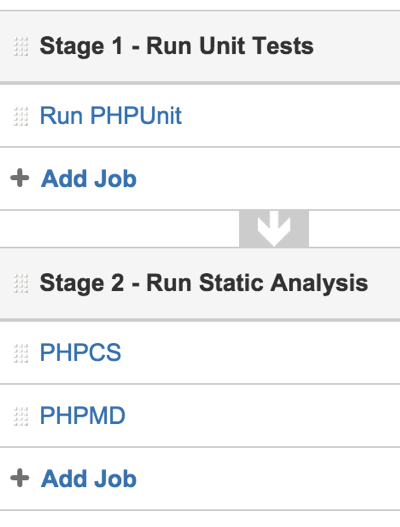

Bamboo groups a "Build Plan" into "Stages". A "Stage" consists of "Jobs". Jobs within a Stage can be run in parallel, but Stages are built sequentially. Above is our build process as it stands. Our static analysis jobs run after the unit tests because they have no mechanism for failing the build; they are used purely for reporting. When we are ready for our functional tests, they will be added as "Stage 2", and the static analyses will be moved to "Stage 3".

The "Run PHPUnit" task:

- Performs a checkout of the git repository

- Installs composer dependencies

- Installs Magento

- Runs phpunit

- Publishes junit test results to Bamboo

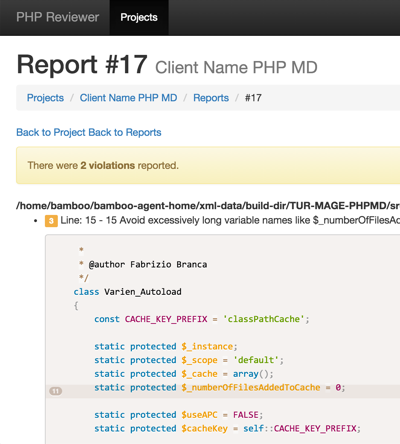

As an example the "PHPMD" task does the following:

- Performs a checkout of the git repository

- Installs composer dependencies

- Runs PHPMD

- Publishes the results to an internal tool

We couldn't easily find a way within Bamboo to display feedback from phpmd or phpcs, so I spent a couple of nights building a tool to do just that.

Managing the database

We've always found managing customer databases a pain in the ass, and usually resort to having a shared drive that contains the latest versions of a customer's database with all confidential information removed.

For this project, we've decided to more tightly couple the database and the project source, using a submodule to store the database. We've tried a number of mechanisms of storing the customer database over the years and thought it was time that we gave this one a fair shot.

To make this work, we think there are a couple of rules that you need to follow.

- Don't commit binary files (such as .sql.gz) to the repository, as they will blow up the size of the repo and diffs will be meaningless.

- Use a standard command to export the database so that the order of the CREATE and INSERT statements is always the same, reducing the size of the diffs and making them meaningful.

- Don't store tables that contain sensitive information, such as customers and orders; it's just not worth it. The brand damage to your company, and your customer's company, caused by a data leak, not to mention the potential court case, does not bear thinking about.

- Don't store tables that contain transient data, such as log tables, as they'll increase the size of the database.
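The first rule is easy to check automatically. Below is a minimal sketch of such a guard, assuming the database submodule is an ordinary directory; the function names and the list of extensions are hypothetical choices, not something we run today.

```shell
# find_compressed_dumps: list compressed files under a directory,
# ignoring anything inside .git.
find_compressed_dumps() {
    find "$1" -type f \
        \( -name '*.gz' -o -name '*.zip' -o -name '*.bz2' \) \
        ! -path '*/.git/*'
}

# check_database_dir: fail if the directory contains compressed dumps.
check_database_dir() {
    found="$(find_compressed_dumps "$1")"
    if [ -n "$found" ]; then
        echo "Compressed dumps found, commit plain .sql instead:" >&2
        echo "$found" >&2
        return 1
    fi
}

# Usage: check_database_dir path/to/database/submodule
```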

To standardise the export command we opted for magerun's db:dump command along with --strip=@development, grabbing only the tables we need. We wrapped this into a phing task, along with a restore task to ensure that developers maintain standard practice while working on the machine.

    <!-- ===================================================== -->
    <!-- Create a database dump -->
    <!-- ===================================================== -->
    <target name="dev:db:dump" depends="check:not_running_in_vagrant" description="Create a database dump">
        <exec command="${vagrant-run} 'cd ${vhost.dir}; php vendor/bin/n98-magerun --root-dir=${vhost.dir}/src/ db:dump --strip=@development --human-readable --stdout' > ${database.dir}/database.sql" passthru="true" />
        <echo message="Dump has been output to ${database.dir}/database.sql, commit and push submodule to publish changes" />
    </target>

    <!-- ===================================================== -->
    <!-- Restores a database dump -->
    <!-- ===================================================== -->
    <target name="dev:db:restore" depends="check:not_running_in_vagrant" description="Restores a database dump">
        <echo message="Fetching latest database" />
        <exec command="git submodule init" dir="${project.basedir}" passthru="true" />
        <exec command="git submodule update" dir="${project.basedir}" passthru="true" />
        <exec command="git pull origin master" dir="${database.dir}" passthru="true" />
        <echo message="Importing database" />
        <exec command="cat database.sql | ${vagrant-run} 'mysql -D${db.name} -u ${db.user} -p${db.pass}'" dir="${database.dir}" passthru="true" />
    </target>

Deployments

For deployments we employ rocketeer, a task runner written in PHP. The deployment happens by:

- sshing to the machine

- cloning the repository

- installing composer dependencies

- sharing relevant directories and files (such as media/, app/etc/local.xml, etc)

- enforcing correct file permissions

- then symlinking this new release to the deployed directory
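The release layout behind those steps can be sketched in plain shell. This is not rocketeer's API or configuration, just an illustration of the pattern: deploy_release is a hypothetical function, a local directory copy stands in for the git clone and composer install, and the releases/, shared/ and current names are assumptions.

```shell
# deploy_release: hypothetical illustration of a symlinked release layout.
# $1 = deploy root, $2 = source directory (stand-in for a fresh clone),
# $3 = release identifier, for example a timestamp.
deploy_release() {
    root="$1"
    source_dir="$2"
    release="$root/releases/$3"

    mkdir -p "$root/releases" "$root/shared/media" || return 1

    # Stand-in for "git clone" followed by "composer install".
    cp -R "$source_dir" "$release" || return 1

    # Enforce file permissions: nothing writable by group or others.
    chmod -R go-w "$release" || return 1

    # Share directories that must survive between releases.
    rm -rf "$release/media"
    ln -s "$root/shared/media" "$release/media" || return 1

    # Point the deployed directory at the new release.
    ln -sfn "$release" "$root/current" || return 1
    echo "$release"
}

# Usage: deploy_release /var/www/site ./checkout "$(date +%Y%m%d%H%M%S)"
```

Because each release lives in its own directory, rolling back is a matter of pointing the symlink at an earlier release.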

Our previous method of deploying projects used Deploy, but we were keen to move this to a system where we didn't have to rely on a third-party tool to perform a deployment. We wrote our deployment using rocketeer so that we could, if we wanted to, run a deployment right off our development machines. The great thing about Deploy was that we had a great audit trail of deployment history, and now that we have Bamboo we can get that audit trail right down to the ticket level.

One of the very first problems we ran into with EC2 was knowing what IP address to allow through our firewalls. After some digging around we opted for using the ec2-associate-address command (which is bundled into the default Bamboo image) to assign one of our elastic IP addresses at the end of the image setup process. This is not going to scale, and will require a rethink when we're running more than one build machine. Amazon run an instance metadata service, accessible over HTTP, that allows you to discover information about the running instance. It's really useful for finding the instance ID, for example, which is required as a parameter to ec2-associate-address.
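A slightly more defensive version of that last step might look like the sketch below. The function names are hypothetical, as are the METADATA_URL and EC2_ASSOCIATE overrides (added only so the function can be exercised away from EC2) and the AWS_ACCESS_KEY / AWS_SECRET_KEY variables that stand in for the real credentials. The -i, -O and -W flags are the ones used in our startup script.

```shell
# instance_id: ask the instance metadata service for this machine's ID.
instance_id() {
    curl -sf --max-time 5 \
        "${METADATA_URL:-http://169.254.169.254/latest/meta-data}/instance-id"
}

# associate_elastic_ip: hypothetical wrapper around ec2-associate-address.
# $1 = the elastic IP address to attach to this instance.
associate_elastic_ip() {
    id="$(instance_id)" || id=""
    if [ -z "$id" ]; then
        echo "Could not read the instance ID, is this an EC2 machine?" >&2
        return 1
    fi
    ${EC2_ASSOCIATE:-ec2-associate-address} \
        -i "$id" -O "$AWS_ACCESS_KEY" -W "$AWS_SECRET_KEY" "$1"
}

# Usage: associate_elastic_ip an.ip.add.ress
```

Failing loudly when the metadata service is unreachable avoids a build machine that silently comes up without the whitelisted address.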

Integrating with Jira

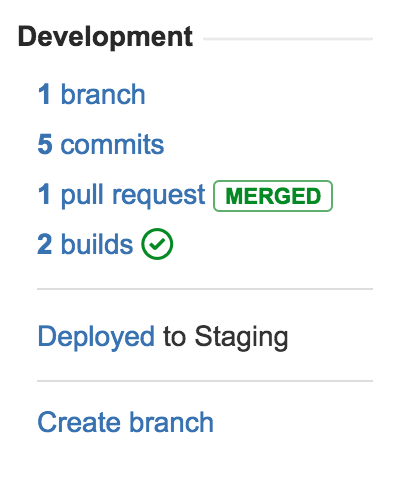

One of the major selling points for the move to Bamboo was the tight integration with Jira. Bamboo builds are mapped automatically to Jira issues (based on branch name), allowing build information to be pulled into the ticket within Jira.

As you can see, right on the ticket in Jira we can now see the build status and where the ticket has been deployed.

Future work

We're still new to this setup, so are expecting this process to evolve until we're happy with it. We need to start thinking about multiple build machines, standardising our build file, thinking about the interaction with vagrant machines, and much more.

Overall we're very happy with how this is running at the moment, and look forward to rolling it out to more projects in the future!